Katalon TestCloud consolidated Katalon's fragmented on-premise testing tools into one cohesive, cloud-native test execution platform. As the Product Manager, I led the initiative to build TestCloud from scratch and integrate it directly with Katalon Studio, our flagship test automation solution. I had two objectives. The first was to give our 30K+ enterprise users rapid, parallel test execution across browsers, devices, and operating systems. The second was to implement FinOps practices that reduced cloud costs, optimized infrastructure spending, and enabled a value-based pricing strategy that maximized ROI.

90% beta adoption rate from our 30K+ enterprise users.

Test cycle times reduced with uptime maintained at 99.9%.

Cloud cost reduction achieved.

Cloud cost forecasting accuracy improved by 40%.

Katalon's enterprise customers (QA engineers) were struggling with siloed, on-premise testing solutions that couldn't scale with their CI/CD pipelines. Our research revealed QA engineers spent up to 40% of their time managing test environments rather than creating test cases. Large enterprises like Nvidia needed to run tests across 35+ browser/OS combinations but lacked the infrastructure to do so efficiently. Meanwhile, our internal cloud spending was unpredictable due to inefficient resource utilization and inconsistent cost allocation across test environments.

Historical data from AWS Cost Explorer and Datadog revealed recurring inefficiencies in our testing infrastructure, including overprovisioned resources during off-peak hours and underprovisioned capacity during peak testing periods. This directly impacted both our product performance and budgeting accuracy. Our analysis showed idle test environments were consuming nearly 30% of our cloud budget – a significant waste of resources.

Additionally, competitors like BrowserStack and LambdaTest were capturing market share with cloud-based solutions, though their high costs and limited integration with test automation platforms presented an opportunity. A major motivation for implementing FinOps was to optimize our cloud costs, which would enable us to create a usage-based pricing strategy for TestCloud that maximized value for both our customers and Katalon while maintaining our competitive edge.

Lead the 0-1 development, integration, and launch of TestCloud as an API-first, cloud-based test execution solution within the Katalon platform to enable parallel execution and seamless integration with popular CI/CD tools like Jenkins, GitHub Actions, Azure DevOps, and CircleCI.

Engage cross-functional teams (engineering, finance, DevOps) to establish a cost governance framework that provides real-time visibility, reduces cloud spending, and improves forecasting accuracy to support sustainable growth.

Optimize cloud costs to inform a consumption-based pricing strategy that aligns resource usage with customer value and positions TestCloud competitively against alternatives like BrowserStack, LambdaTest, and Sauce Labs.

I began with discovery, analyzing 40+ user workflows in depth and running Jobs-to-be-Done (JTBD) workshops with QA teams from key enterprise accounts. This research revealed three critical requirements: seamless integration with existing CI/CD pipelines, support for a wide range of browsers and operating systems, and real-time execution insights. Using Opportunity Solution Trees, I prioritized parallel test execution as our core value proposition, capable of reducing testing time by up to 50% for enterprise clients. I also ran two-week Agile sprints with daily standups to ensure rapid iteration across distributed teams.
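To make the parallel-execution value proposition concrete, here is a minimal sketch of how a test suite could be split across workers and how the resulting wall-clock time compares with a serial run. It is an illustration only, not TestCloud's actual scheduler: the function name `shard_tests`, the greedy longest-first strategy, and the test names and durations are all assumptions.

```python
import heapq


def shard_tests(durations_s: dict[str, float], workers: int) -> tuple[list[list[str]], float]:
    """Greedily assign tests (longest first) to the least-loaded worker.

    Returns the per-worker test lists and the estimated wall-clock time,
    which is the load of the busiest worker.
    """
    if workers <= 0:
        raise ValueError("workers must be positive")

    # Min-heap of (current_load_seconds, worker_index).
    heap = [(0.0, i) for i in range(workers)]
    heapq.heapify(heap)
    shards: list[list[str]] = [[] for _ in range(workers)]

    ordered = sorted(durations_s.items(), key=lambda kv: kv[1], reverse=True)
    for name, duration in ordered:
        load, idx = heapq.heappop(heap)
        shards[idx].append(name)
        heapq.heappush(heap, (load + duration, idx))

    wall_clock = max(load for load, _ in heap)
    return shards, wall_clock


# Hypothetical suite: names and durations (seconds) are made up for illustration.
suite = {"login": 60, "checkout": 50, "search": 40, "profile": 30, "cart": 20}
serial_time = sum(suite.values())
shards, parallel_time = shard_tests(suite, workers=2)
reduction = 1 - parallel_time / serial_time
print(f"serial={serial_time}s parallel={parallel_time}s reduction={reduction:.0%}")
```

With this made-up suite, two workers cut the run from 200 seconds to 110 seconds. Real savings depend on how evenly test durations can be balanced and on session start-up overhead, which this sketch ignores.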

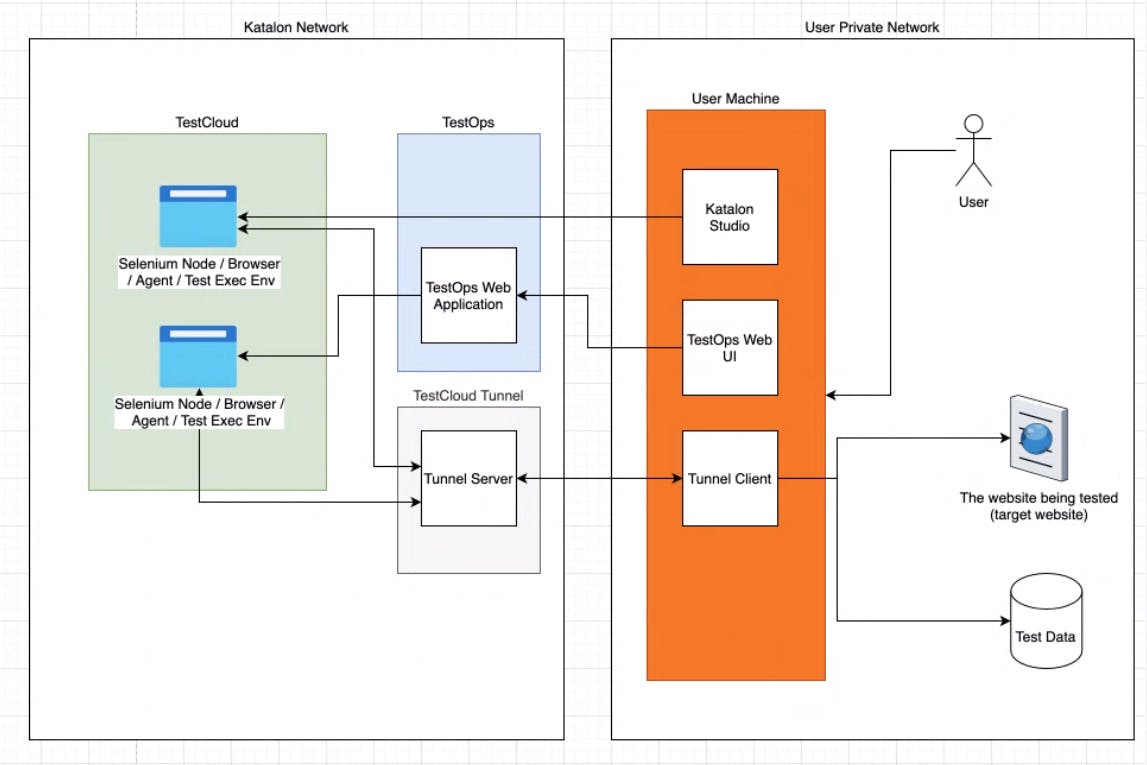

Working with Katalon Studio's architects, I designed TestCloud with a microservices architecture on AWS, using ECS for container orchestration, EC2 for browser instances, and S3 for artifact storage. We implemented auto-scaling to handle variable test loads and took an API-first approach that enabled seamless integration with Katalon Studio's existing Record & Playback, Object Repository, and Test Case Management capabilities.
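The auto-scaling idea can be sketched as a simple capacity calculation: given the number of queued test sessions, decide how many browser instances to run, bounded by a floor and a ceiling. This is a hypothetical illustration; the function name, the sessions-per-instance figure, and the min/max limits are assumptions, not TestCloud's actual scaling policy.

```python
import math


def desired_browser_instances(
    queued_sessions: int,
    sessions_per_instance: int = 4,   # assumed capacity of one instance
    min_instances: int = 2,           # assumed warm pool for low latency
    max_instances: int = 50,          # assumed cost ceiling
) -> int:
    """Return the instance count needed for the current queue, within bounds."""
    if sessions_per_instance <= 0:
        raise ValueError("sessions_per_instance must be positive")
    if queued_sessions < 0:
        raise ValueError("queued_sessions cannot be negative")

    needed = math.ceil(queued_sessions / sessions_per_instance)
    return max(min_instances, min(max_instances, needed))


# Example: a customer queues one session per browser/OS combination.
print(desired_browser_instances(queued_sessions=35))
```

The floor keeps a small warm pool available during quiet periods, while the ceiling caps spend during bursts. Those are the two failure modes (underprovisioning at peak, overprovisioning off-peak) that the cost analysis identified.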

For the technical implementation, I authored comprehensive PRDs defining both functional requirements (parallel execution, cross-browser testing, real-time reporting) and non-functional requirements (99.9% availability, enterprise security, compliance with SOC 2 Type II). My North Star Metrics Dashboard tracked KPIs like parallel test utilization, browser compatibility coverage, and execution speed, guiding our biweekly roadmap refinements.

I designed a phased beta program targeting 20 strategic enterprise accounts with specific testing challenges we could solve. Biweekly feedback sessions revealed key friction points – particularly around migration from existing testing infrastructures – which we addressed through pre-launch enhancements to our onboarding experience.

To support adoption, I created detailed migration guides and documentation for popular test frameworks (Selenium and Katalon's KS format) and established a dedicated support channel for beta users. Together, these measures contributed to our 90% beta adoption rate.

After conducting competitive analysis against BrowserStack ($129-$399/month), LambdaTest ($15-$200/month), and Sauce Labs ($49-$549/month), I developed a consumption-based pricing model that offered better value for heavy usage patterns typical of enterprise clients. This model aligned with enterprise budgeting cycles and provided predictable costs for annual planning.

My go-to-market execution included crafting persona-specific value propositions targeting QA Managers (reduced testing time), DevOps Engineers (CI/CD integration), and CTOs (cost savings). I created sales battle cards highlighting concrete advantages over competitors and developed ROI calculators that demonstrated tangible time and cost savings compared to on-premise solutions or competing cloud services.

The FinOps implementation began with a thorough analysis of historical cloud spending using AWS Cost Explorer and Datadog. I identified that browser instances and VM provisioning represented over 70% of our cloud costs, with significant waste from idle resources.

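Working with our DevOps team, I defined key metrics like "Cost per Test Execution" and "Cost per Parallel Run" to establish baselines and identify optimization opportunities. Using these insights, we implemented three practices: mandatory resource tagging, a showback-to-chargeback accountability model, and regular cross-functional FinOps reviews.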

I enforced mandatory resource tagging via Terraform with clear namespaces (env:, customer:, feature:) for granular cost allocation. This enabled our centralized Looker dashboard to provide real-time visibility into cloud spending by feature, customer segment, and test type.
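As a rough illustration of how tagging feeds cost allocation, the sketch below checks resources for the three required tag keys, rolls up spend by a chosen tag, and derives a "Cost per Test Execution" figure. The record layout, function names, and all numbers are hypothetical; the real pipeline ran on Terraform-enforced tags and a Looker dashboard, not this code.

```python
from collections import defaultdict

# Tag keys taken from the namespaces named above.
REQUIRED_TAGS = ("env", "customer", "feature")


def missing_tags(tags: dict[str, str]) -> list[str]:
    """Return the required tag keys that are absent or empty."""
    return [key for key in REQUIRED_TAGS if not tags.get(key)]


def allocate_costs(line_items: list[dict], key: str) -> dict[str, float]:
    """Sum cost per value of one tag key; untagged spend gets its own bucket."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        bucket = item["tags"].get(key) or "untagged"
        totals[bucket] += item["cost_usd"]
    return dict(totals)


def cost_per_test_execution(total_cost_usd: float, executions: int) -> float:
    """Unit cost used as a baseline metric."""
    if executions <= 0:
        raise ValueError("executions must be positive")
    return total_cost_usd / executions


# Hypothetical billing line items (values are made up).
items = [
    {"cost_usd": 12.5, "tags": {"env": "prod", "customer": "acme", "feature": "parallel-run"}},
    {"cost_usd": 7.5, "tags": {"env": "prod", "customer": "globex", "feature": "parallel-run"}},
    {"cost_usd": 4.0, "tags": {"env": "staging"}},
]

by_feature = allocate_costs(items, "feature")
print(by_feature)
print(missing_tags(items[2]["tags"]))
print(cost_per_test_execution(by_feature["parallel-run"], executions=400))
```

Keeping an explicit "untagged" bucket is a deliberate choice in this sketch: spend that cannot be attributed stays visible instead of silently disappearing from the per-feature totals.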

To build financial accountability, I took a progressive approach, starting with a showback model to raise cost awareness across engineering teams, then transitioning to a chargeback model that assigned financial responsibility to product teams. AWS Budgets with automated alerts flagged cost anomalies early, before they impacted our margins.
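A showback report with budget alerts can be sketched in a few lines: each team sees its spend against budget, and a status flag fires once usage crosses a threshold. The team names, budgets, and the 80% alert threshold below are assumptions for illustration; in practice the alerting was handled by AWS Budgets rather than custom code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamSpend:
    team: str
    budget_usd: float
    actual_usd: float


def showback_report(
    spend: list[TeamSpend], alert_threshold_pct: float = 80.0
) -> list[tuple[str, float, str]]:
    """Return (team, percent of budget used, status) for each team."""
    rows = []
    for s in spend:
        if s.budget_usd <= 0:
            raise ValueError(f"budget for {s.team} must be positive")
        used_pct = round(100 * s.actual_usd / s.budget_usd, 1)
        if used_pct >= 100.0:
            status = "over budget"
        elif used_pct >= alert_threshold_pct:
            status = "alert"
        else:
            status = "ok"
        rows.append((s.team, used_pct, status))
    return rows


# Hypothetical month-to-date figures.
month_to_date = [
    TeamSpend("execution", budget_usd=1000, actual_usd=850),
    TeamSpend("reporting", budget_usd=500, actual_usd=200),
    TeamSpend("tunnel", budget_usd=400, actual_usd=420),
]

for team, used_pct, status in showback_report(month_to_date):
    print(f"{team:<10} {used_pct:>6}%  {status}")
```

Under showback, a report like this is informational only. Moving to chargeback means the same figures are booked against each team's own budget.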

Our regular cross-functional FinOps reviews brought together engineering, finance, and product teams to evaluate spending patterns and optimization opportunities. Using Amazon Forecast with our historical data, we improved budgeting accuracy by 40%, allowing for more precise pricing and resource planning.
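One way to quantify a forecasting improvement like this is to compare the mean absolute percentage error (MAPE) of forecasts before and after the change. The case study does not state which accuracy measure was used, so treating the 40% figure as a relative reduction in MAPE is an assumption, and the monthly figures below are made up.

```python
def mape(actuals: list[float], forecasts: list[float]) -> float:
    """Mean absolute percentage error, as a fraction (0.2 means 20%)."""
    if not actuals or len(actuals) != len(forecasts):
        raise ValueError("inputs must be non-empty and the same length")
    if any(a == 0 for a in actuals):
        raise ValueError("actual values must be non-zero for MAPE")
    errors = [abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)]
    return sum(errors) / len(errors)


def relative_improvement(error_before: float, error_after: float) -> float:
    """Fractional reduction in error between two forecasting approaches."""
    if error_before <= 0:
        raise ValueError("error_before must be positive")
    return (error_before - error_after) / error_before


# Hypothetical monthly cloud spend (USD) and two sets of forecasts.
actual_spend = [100.0, 200.0]
old_forecast = [80.0, 240.0]   # e.g. manual spreadsheet estimates
new_forecast = [88.0, 224.0]   # e.g. model-based estimates

before = mape(actual_spend, old_forecast)
after = mape(actual_spend, new_forecast)
print(f"MAPE before={before:.2f} after={after:.2f} "
      f"improvement={relative_improvement(before, after):.0%}")
```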

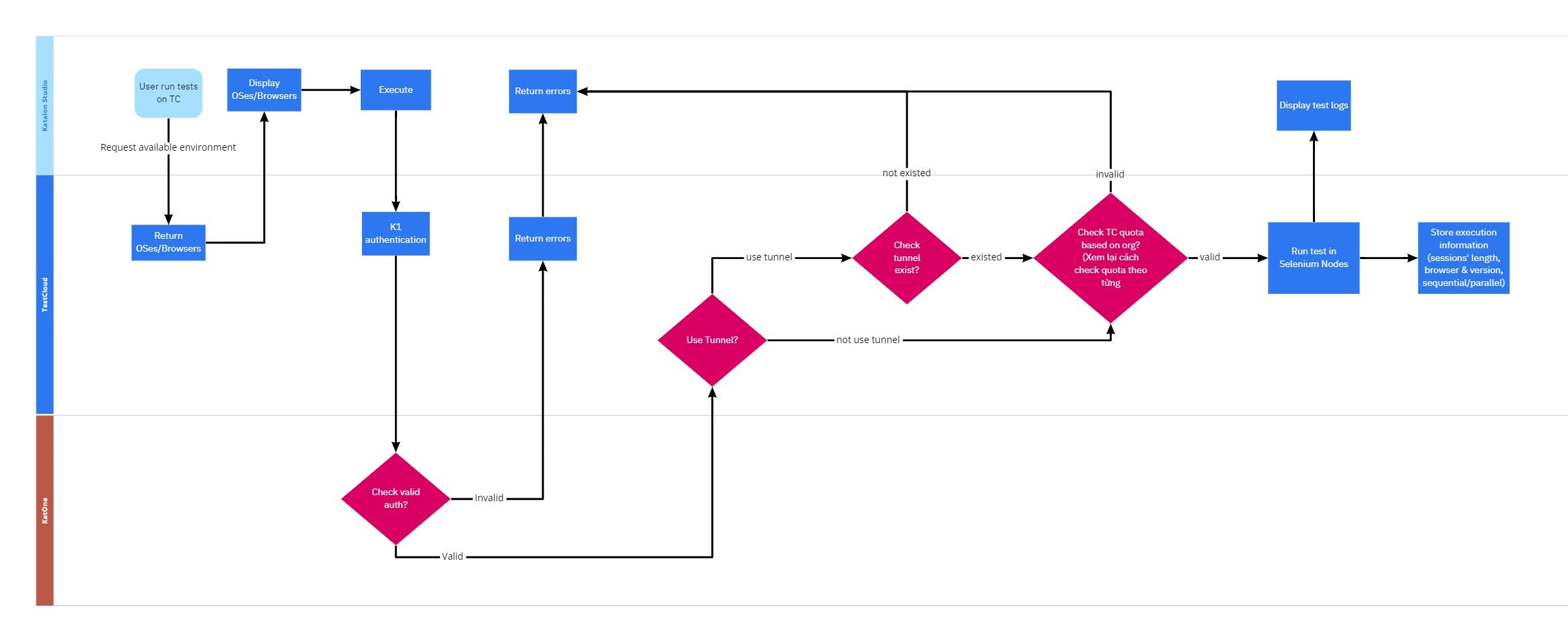

These diagrams show some of TestCloud's high-level architecture and workflows. When integrated into Katalon Studio, TestCloud Tunnel opens a secure connection from the TestCloud server to a user's local resources, so QA engineers can test applications in restricted environments.